In this blog post we'll cover sound from the very beginning. Those unfamiliar with audio will get a brief intro to a wide range of topics, and those with experience will encounter some new ways of thinking about familiar concepts. This is a recommended pre-tutorial for the Intro to Intonal series, which assumes a basic knowledge of audio.

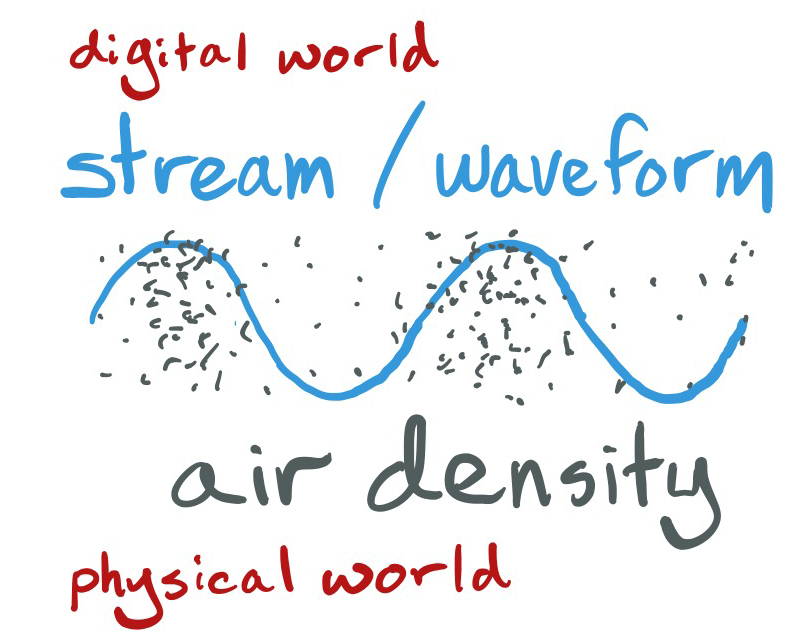

Everyone is probably most familiar with the waveform representation of audio. It shows how a stream of values representing audio changes over time.

But this is an abstraction - this waveform doesn't exist in physical reality. What it actually represents is a wave traveling through the air, like ripples in a pond or stop-and-go traffic on the highway.

Speakers and microphones are what we use to translate between waveforms and these ripples through the air. The mechanism is simple, despite what hi-fi manufacturers say to make it sound complex. The value of the waveform corresponds to the displacement of the speaker's or microphone's membrane, which is coupled to an electromagnet: 1 for all the way forward and -1 for all the way back. Moving forward pushes the air, creating higher density; pulling back creates lower density.
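To make "a stream of values between -1 and 1" concrete, here is a minimal Python sketch. It assumes a digital waveform is simply a list of floating-point samples at 44.1 kHz (a common rate, but not something this post specifies). Values outside [-1, 1] ask the membrane to travel further than it can, so the sketch clips them.

```python
import math

SAMPLE_RATE = 44100  # samples per second; an assumed, commonly used rate


def sine_samples(freq_hz, duration_s, amplitude=1.0):
    """Return a list of samples tracing a sine-shaped waveform."""
    n = round(SAMPLE_RATE * duration_s)
    return [amplitude * math.sin(2 * math.pi * freq_hz * i / SAMPLE_RATE) for i in range(n)]


def clip(samples):
    """Limit every sample to [-1, 1]: the membrane's full travel in this model."""
    return [max(-1.0, min(1.0, s)) for s in samples]


quiet = sine_samples(440, 0.01, amplitude=0.5)        # membrane moves halfway each way
loud = clip(sine_samples(440, 0.01, amplitude=1.5))   # asks for more travel than exists
print(max(quiet), max(loud))
```

The clipped version has its peaks flattened at exactly 1 and -1, which is why overly loud digital audio distorts.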

In fact, there is not really a difference between speakers and microphones. You can use a speaker as a microphone and vice versa.

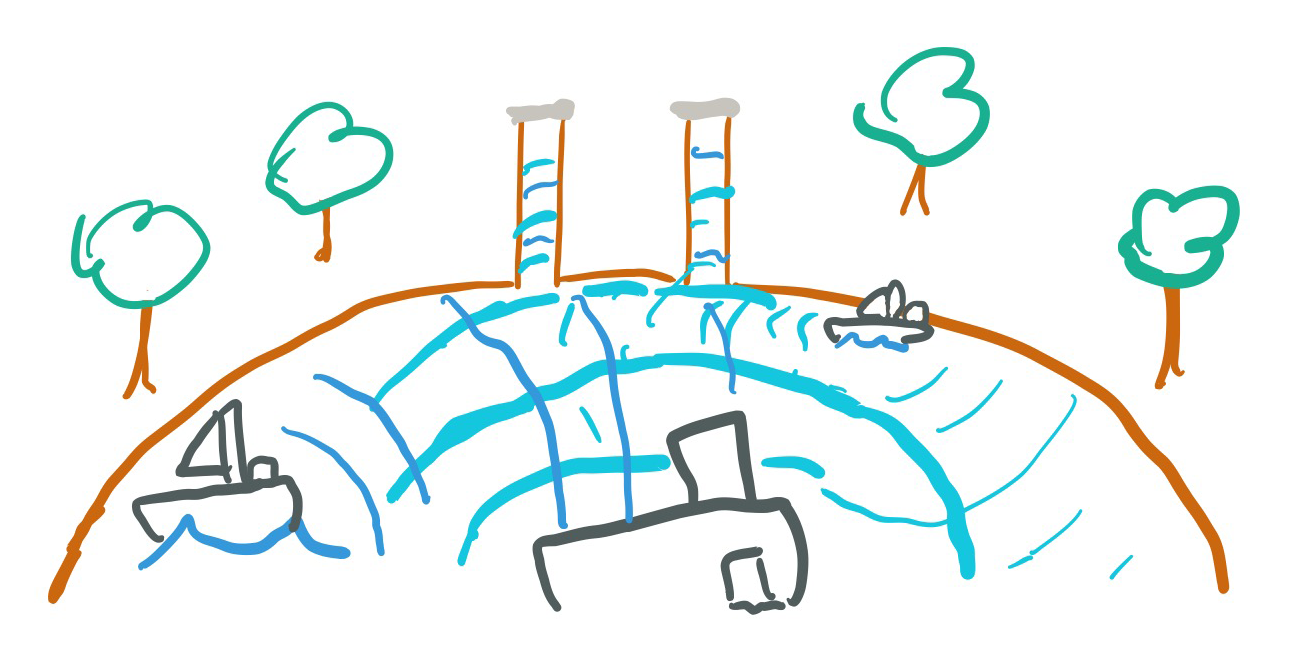

And our eardrum works in a very similar way. It's stunning how much we can piece together from so little information. Someone once described it to me as a giant lake (the world) with two small canals running off of it (our ear canals), each with a thin handkerchief draped across it (our eardrums). Just by looking at how the two handkerchiefs move with the ripples of the water, we can tell how many boats are on the lake, and the size, shape, and position of each.

Just as we extract that information about the boats from the handkerchiefs, our ears and brain translate from the physical world to the perceptual world. Perceptual features, tightly coupled with the physical world, are how we think about sound.

Humans are pattern matching machines. A single event in isolation has no meaning. We can only glean some information when something repeats. Repetition is the basis of our perception of sound.

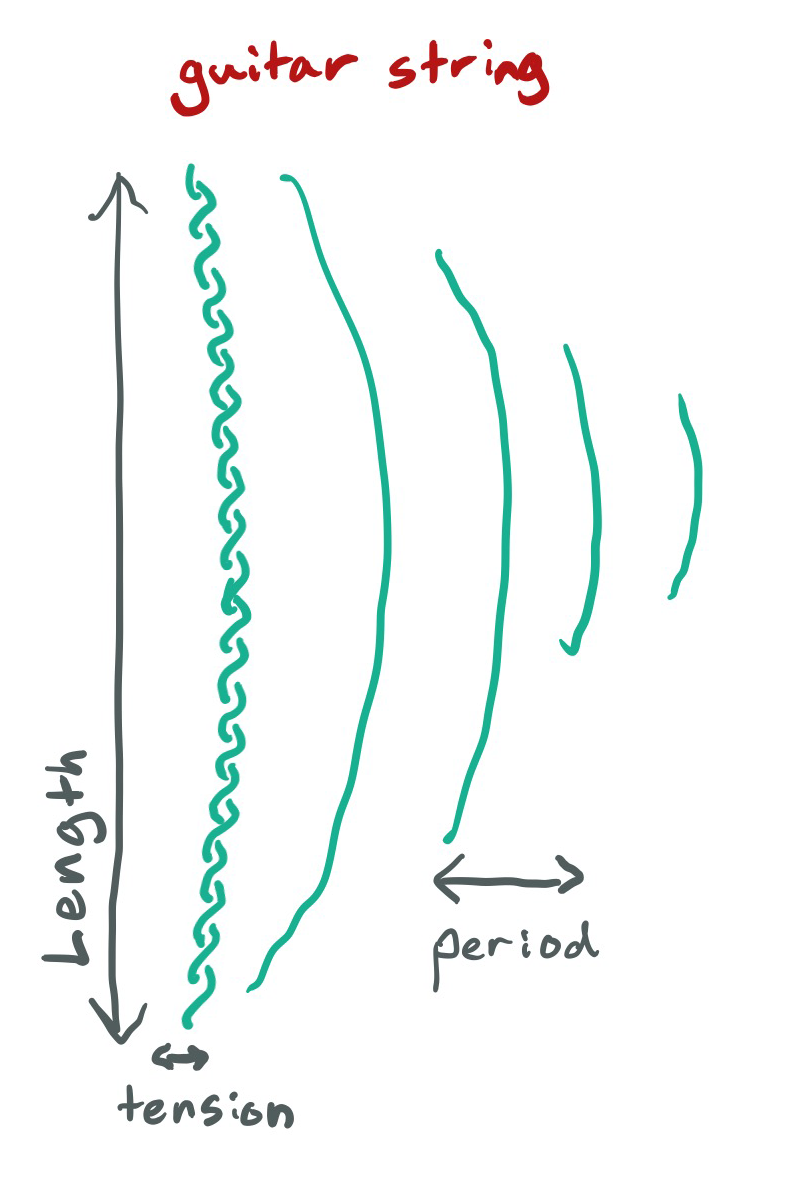

In the physical world, when an object is struck, plucked, or excited by other means, it resonates (vibrates) at a rate that depends on its material, thickness, and other properties. The vibration displaces the air around it just as the speaker does. Just as with the boats on the lake, we pick up key information from the air the object pushes around.

In physical terms, repetition is measured as frequency (typically in hertz, or Hz, the number of repeats per second) and period (the duration of one repeat).
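Frequency and period are reciprocals of each other: a period T in seconds gives a frequency of 1/T Hz. A small Python sketch of the conversion, plus how many digital samples one repeat spans at an assumed 44.1 kHz sample rate:

```python
def period_seconds(freq_hz):
    """Period is the reciprocal of frequency: T = 1 / f."""
    return 1.0 / freq_hz


def frequency_hz(period_s):
    """Frequency is the reciprocal of period: f = 1 / T."""
    return 1.0 / period_s


def samples_per_period(freq_hz, sample_rate=44100):
    """How many samples one repeat spans (the 44.1 kHz default is an assumption)."""
    return sample_rate / freq_hz


print(period_seconds(440))       # about 0.00227 s, i.e. roughly 2.27 ms per repeat
print(samples_per_period(440))   # about 100.2 samples per repeat
print(period_seconds(20))        # 0.05 s: repeating 20 times a second
```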

When a stream repeats quickly (more than about 20 times a second), we perceive pitch. We often describe pitch in hertz, but pitch and frequency aren't the same thing: there are ways to trick our perception into hearing pitches that don't exist in the physical world, and vice versa.

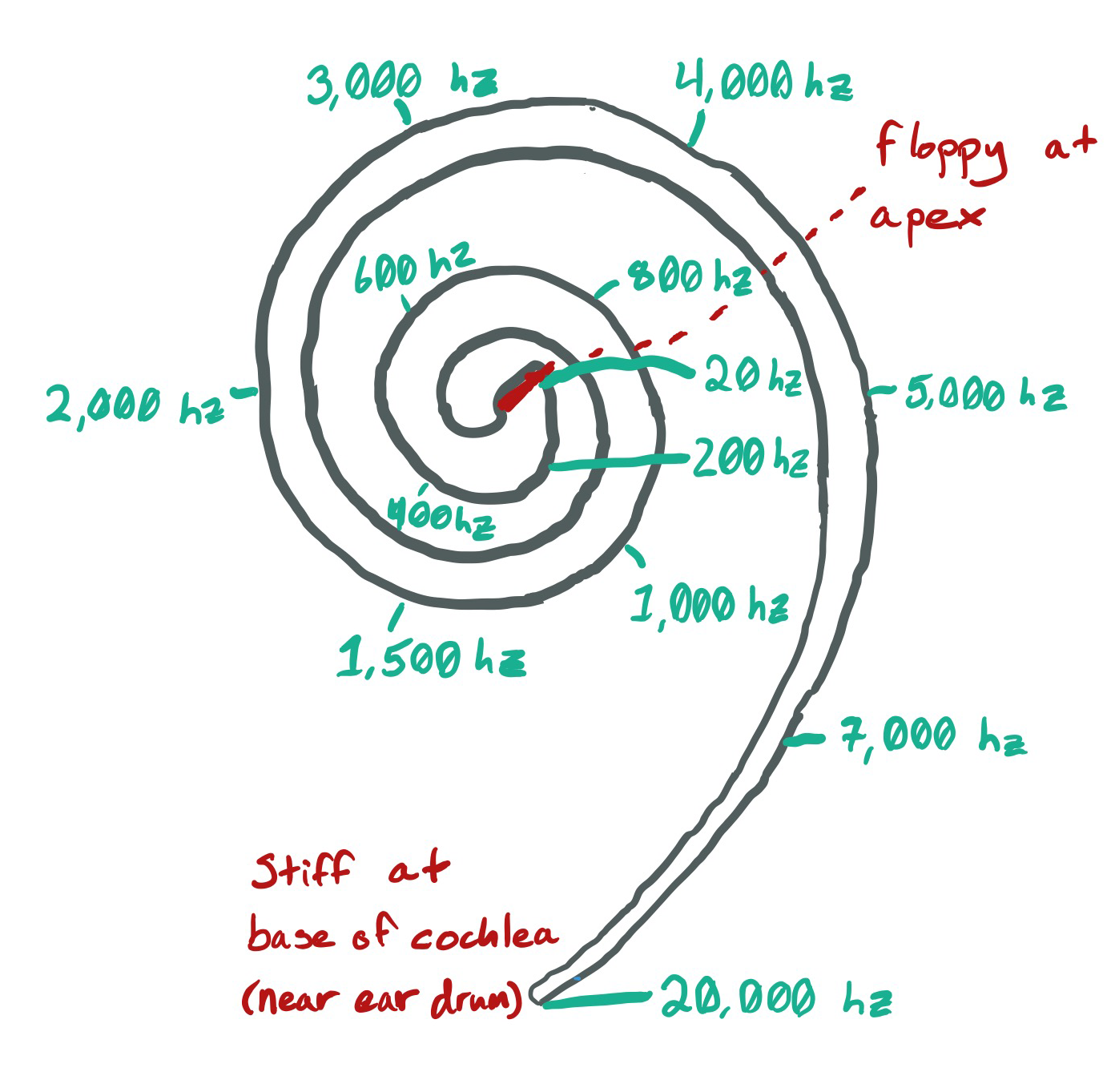

Inside our inner ear is the cochlea, a strange and beautiful spiral. When unrolled, you can see it starts thick and tapers to a thin point. The cochlea is an amazing product of evolution: like any physical object, it resonates at different frequencies depending on its thickness at each point. Because its thickness varies along its length, different points respond to different frequencies, so it can resonate at many places at once.

When sound enters the ear and sets the cochlea resonating, tiny hairs along it are activated and a signal is sent to the brain: there is a sound at this particular frequency. Just as objects resonate to produce specific frequencies based on their size, the cochlea resonates to read that resonance. It's a mirror of the outside world.

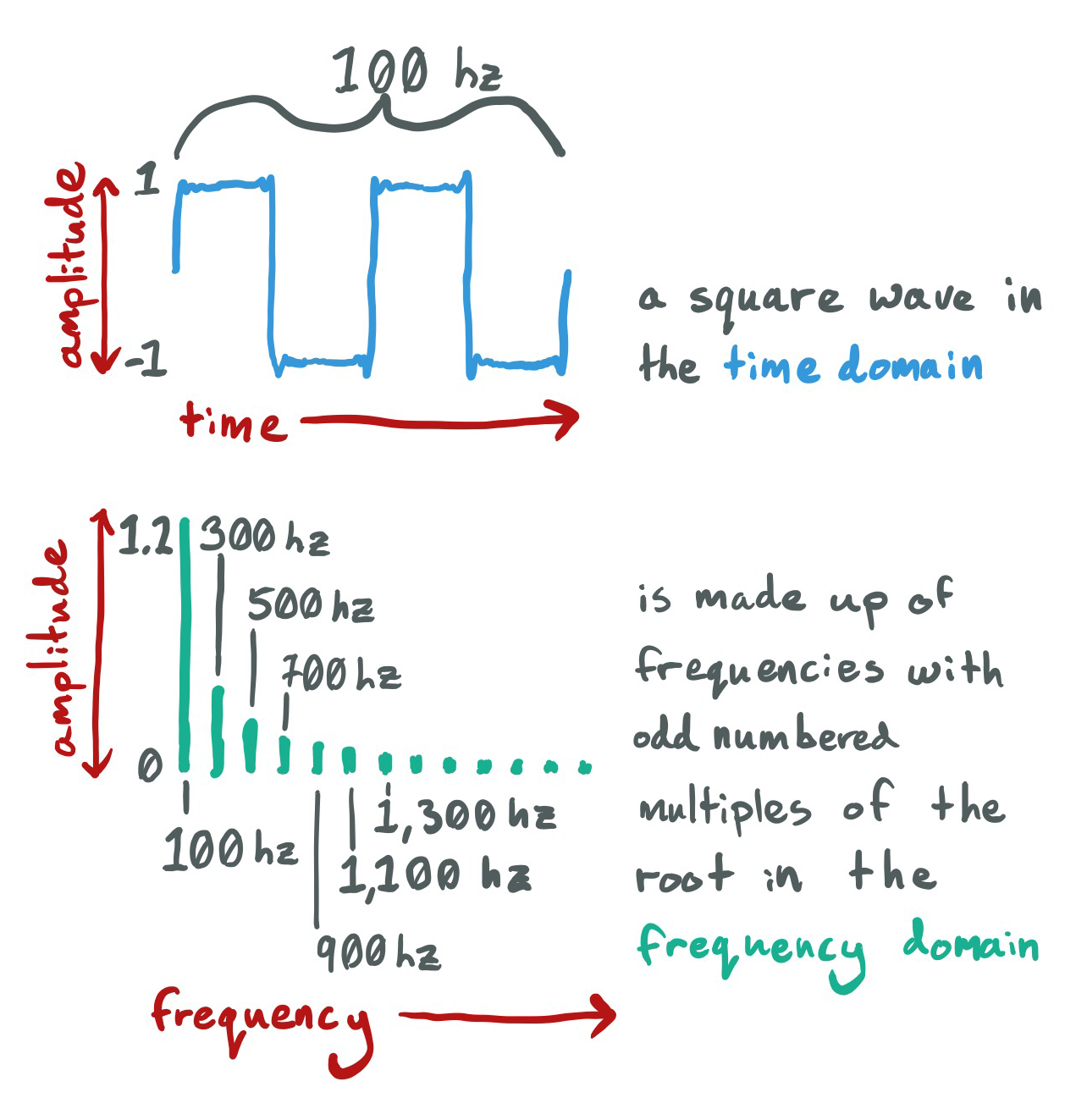

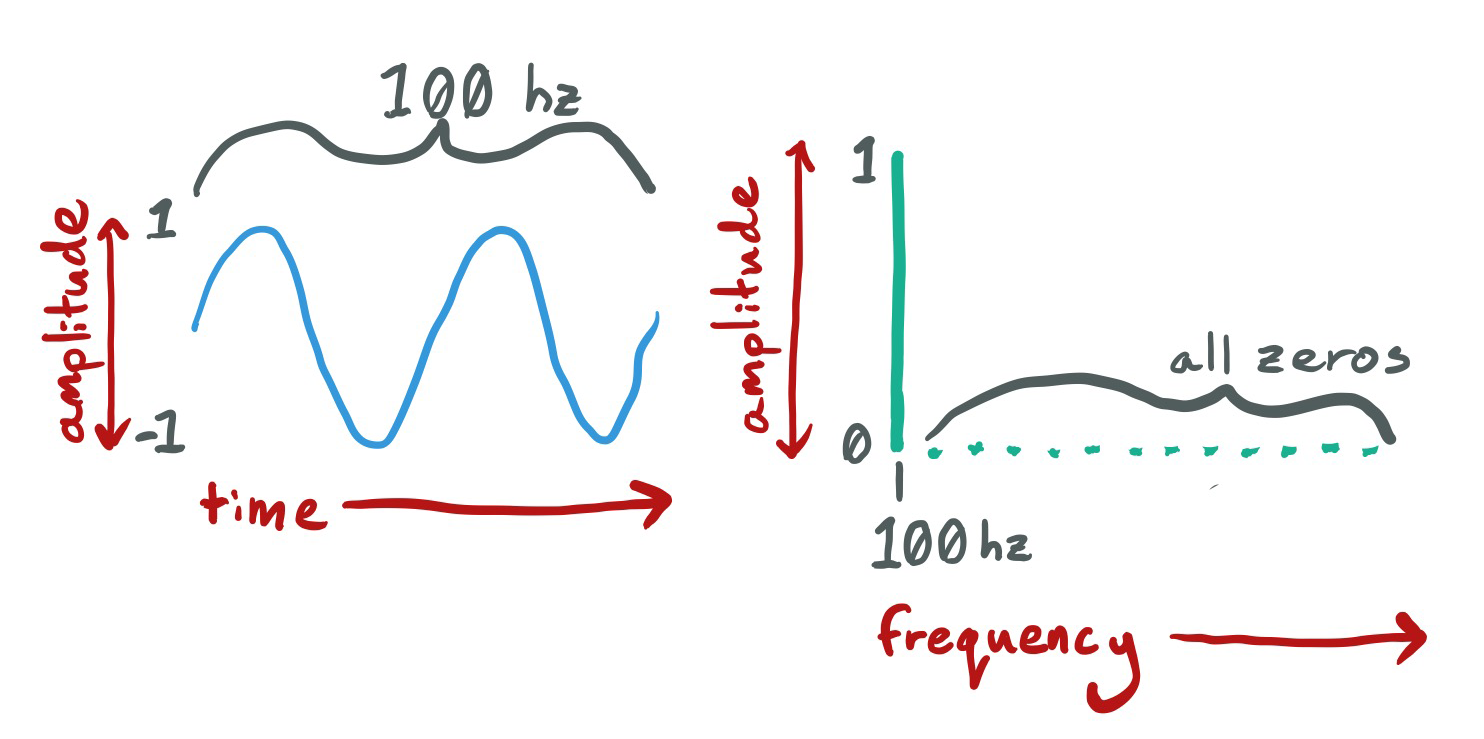

This also gives us a different representation of the same sound. How the air is displaced over time, which we show as a waveform, is called the time domain. The frequencies contained in that waveform, which the cochlea picks up, are called the frequency domain, which we visualize as a frequency spectrum.

Outside of the ear, we use the Fourier transform (the Fast Fourier Transform, or FFT, is the best-known version, though other versions exist) to translate between the time domain and the frequency domain. The purest form of resonance is the sine wave, which represents a single point in the frequency domain.
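To see that a sine wave really is "a single point in the frequency domain", here is a small Python sketch. It uses a naive discrete Fourier transform rather than an FFT; an FFT computes the same result much faster, but the naive loop is easier to read. The buffer length of 64 samples and the choice of exactly 5 cycles are arbitrary illustration values.

```python
import cmath
import math


def dft_magnitudes(samples):
    """Naive discrete Fourier transform, O(N^2).
    Returns the magnitude of each frequency bin k = 0 .. N/2."""
    n = len(samples)
    mags = []
    for k in range(n // 2 + 1):
        # Correlate the signal with a complex sinusoid completing k cycles over the buffer
        total = sum(samples[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        mags.append(abs(total) / n)
    return mags


# 64 samples containing exactly 5 cycles of a sine wave
N = 64
sine = [math.sin(2 * math.pi * 5 * t / N) for t in range(N)]
mags = dft_magnitudes(sine)
peak_bin = max(range(len(mags)), key=lambda k: mags[k])
print(peak_bin)  # 5: all the energy lands in a single bin
```

Every other bin comes out at (numerically) zero, which is the single spike you see when a pure sine wave is shown as a spectrum.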

After the cochlea, our body's physical processing of sound ends and our brain takes over. The study of how the brain processes audio is called psychoacoustics. This is where our brain groups different frequencies together and guesses whether they come from the same source. This can go wrong with completely bonkers results, but I'll leave that particular YouTube rabbit hole to the reader. We'll stick to when the process goes right.

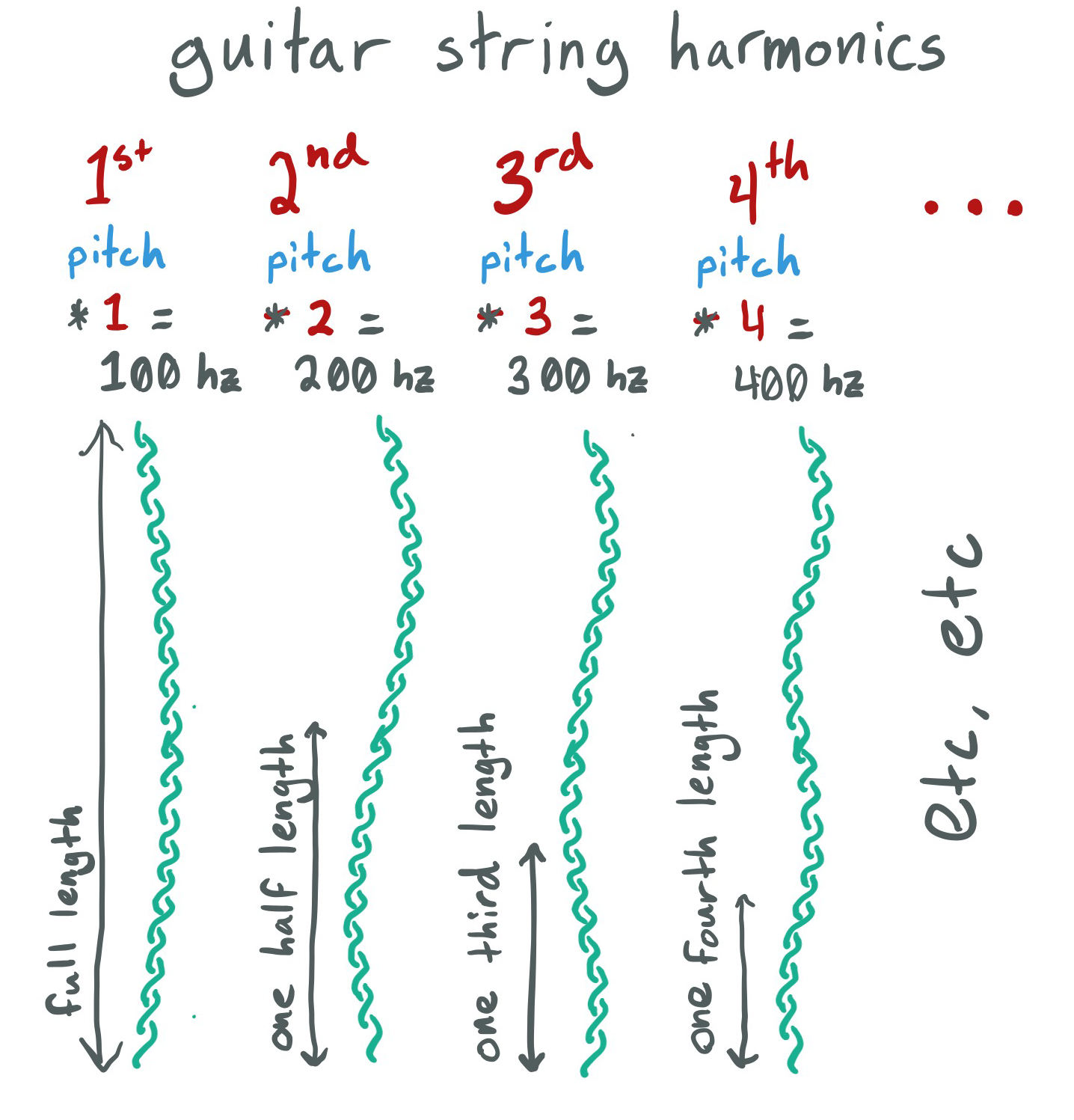

All physical objects resonate at multiple frequencies. In the physical world, they tend to resonate together at frequencies with simple ratios to one another. The main pitch we perceive is called the fundamental frequency, and frequencies at whole-number multiples of the fundamental are called harmonic frequencies, or harmonics.
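Here is a minimal Python sketch of the harmonic series and of building a tone by summing harmonics (additive synthesis). The 1/n amplitude per harmonic is an assumption chosen for illustration; it gives a bright, sawtooth-like color, and real objects each have their own amplitude pattern.

```python
import math


def harmonic_frequencies(fundamental_hz, count):
    """Harmonics sit at whole-number multiples of the fundamental: f, 2f, 3f, ..."""
    return [fundamental_hz * n for n in range(1, count + 1)]


def additive_tone(fundamental_hz, count, sample_rate=44100, duration_s=0.01):
    """Sum sine-wave harmonics; amplitude 1/n for harmonic n is an assumed choice."""
    n_samples = round(sample_rate * duration_s)
    harmonics = list(enumerate(harmonic_frequencies(fundamental_hz, count), start=1))
    out = []
    for i in range(n_samples):
        t = i / sample_rate
        out.append(sum(math.sin(2 * math.pi * f * t) / n for n, f in harmonics))
    return out


print(harmonic_frequencies(110, 5))  # [110, 220, 330, 440, 550]
tone = additive_tone(110, 8)
```

Changing the amplitude given to each harmonic changes the color of the tone while its perceived pitch stays at the 110 Hz fundamental.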

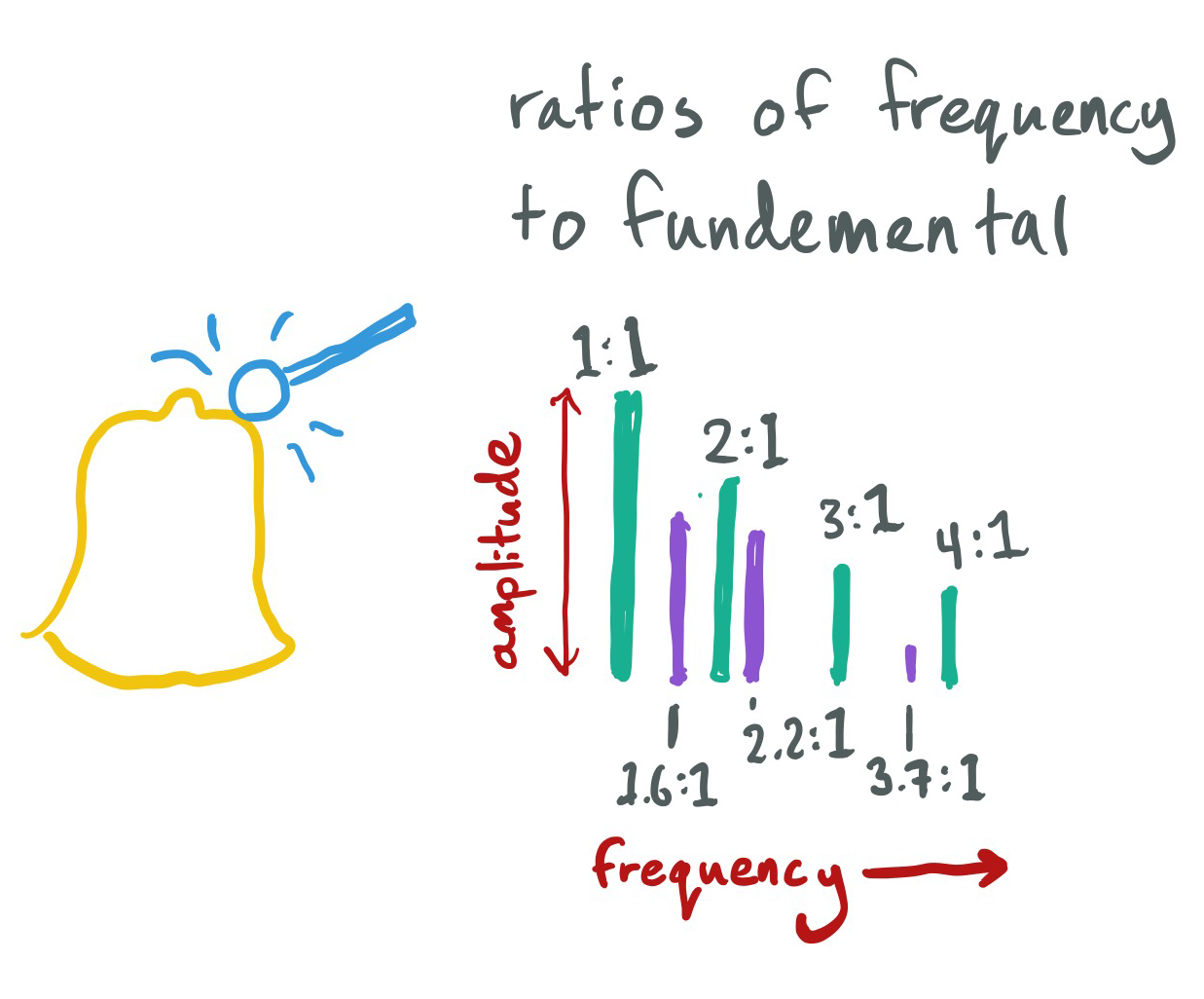

Some resonances are more intense than others, giving them a higher amplitude. Some resonances, like those caused by bowing a string, have a slow attack, meaning their amplitude grows slowly over time. Others have a fast attack but a slow decay, like a piano string that is struck and rings through the wooden case. Metal objects, like bells, often have inharmonic resonances, which tend to have fast attacks and decays. Each individual resonance within a single object has its own amplitude, attack, and decay, though they tend to be correlated (move together). When an object is struck, the object used to strike it also resonates, typically with a fast attack and decay. These frequencies, called transients, are often correlated with the object being hit, so the two blend together perceptually. Through life experience we learn how to group sounds and identify their source, and through ear training one can learn to pick apart the components of an object's sound.
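A rough Python sketch of "each resonance has its own amplitude, attack, and decay". The envelope shape (a linear rise followed by an exponential fall) and all parameter values below are assumptions made for illustration, not measurements of real instruments.

```python
import math


def envelope(t, attack_s, decay_s):
    """Linear rise to full level over attack_s, then exponential decay (assumed shape)."""
    if t < attack_s:
        return t / attack_s
    return math.exp(-(t - attack_s) / decay_s)


def partial(t, freq_hz, amplitude, attack_s, decay_s):
    """One resonance at time t, with its own amplitude, attack, and decay."""
    return amplitude * envelope(t, attack_s, decay_s) * math.sin(2 * math.pi * freq_hz * t)


# Hypothetical parameters: a bowed-string-like partial (slow attack, long decay)
# and a struck, bell-like inharmonic partial (fast attack, fast decay)
bowed = dict(freq_hz=220, amplitude=0.8, attack_s=0.3, decay_s=2.0)
struck = dict(freq_hz=587, amplitude=0.5, attack_s=0.002, decay_s=0.05)


def sound(t):
    """A sound object is the sum of its partials."""
    return partial(t, **bowed) + partial(t, **struck)


print(envelope(0.5, struck["attack_s"], struck["decay_s"]))  # nearly silent after half a second
print(envelope(0.5, bowed["attack_s"], bowed["decay_s"]))    # still close to full level
```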

At the end of this process, we perceive a set of grouped frequencies as a single sound object with a single pitch at the fundamental. The amplitude and movement of each harmonic is perceived as the sound object's texture or color. The more a sound object's frequencies fall on whole-number harmonics, the more tonal it sounds, giving a stronger perception of pitch. A bell becomes more tonal over time: when you strike it, it initially contains so many inharmonic frequencies that the pitch is difficult to determine, but as those inharmonic frequencies quickly die out, the fundamental becomes stronger.

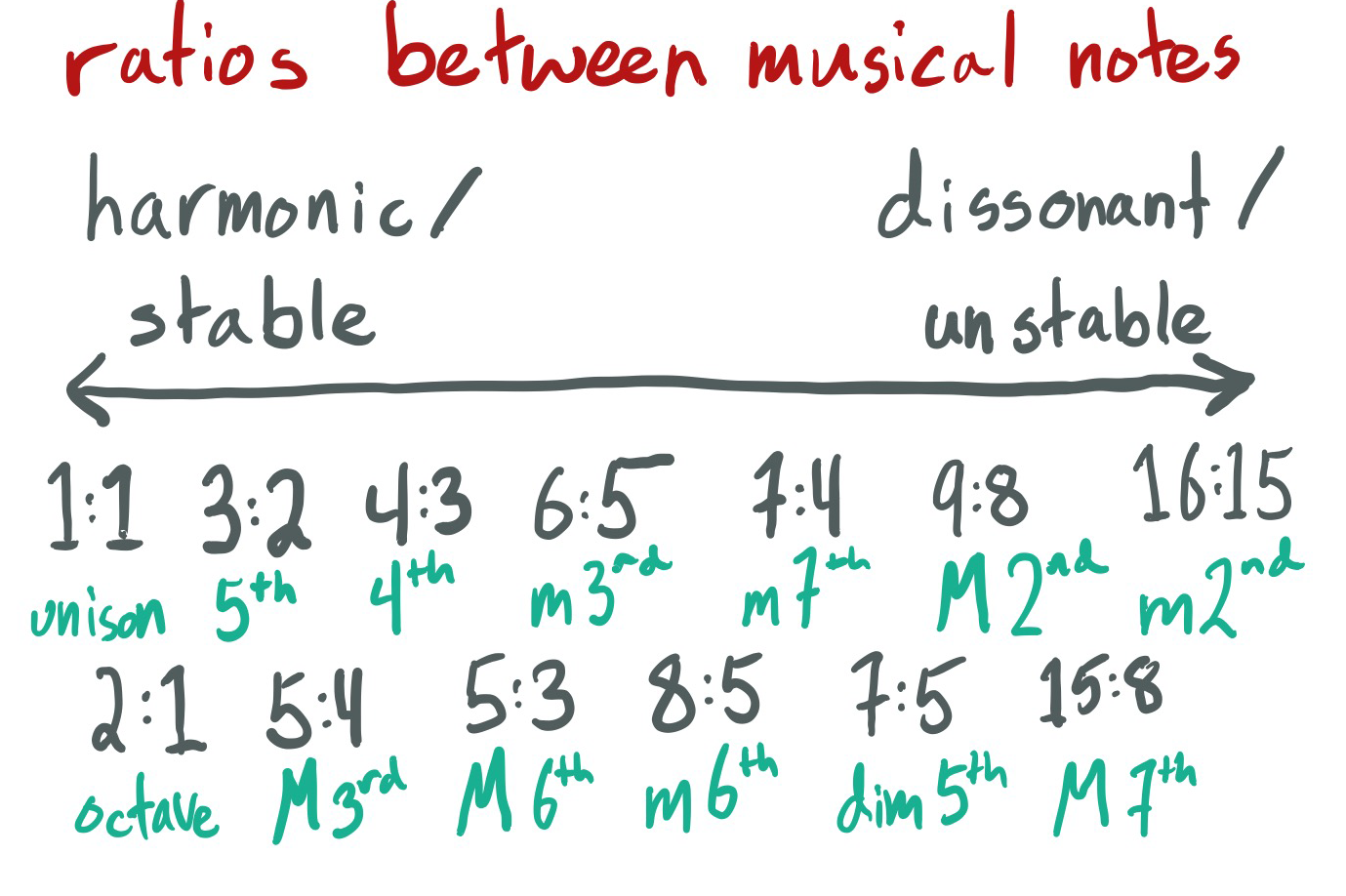

Just as we group simple-ratio frequencies into a single sound object, we group multiple sound objects whose pitches are in simple ratios; this is called harmony. When the sound objects' pitches are in complex ratios, the result is dissonant. By mixing together sound objects that harmonize, we can form chords, the basis of modern music theory and outside the scope of this intro.
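One way to see what "simple ratio" means is to approximate the ratio of two pitches by a fraction with a small denominator. This Python sketch uses the standard library's `fractions.Fraction.limit_denominator`; the cutoff of 16 is an arbitrary choice, and a small fraction is only a rough stand-in for how consonant an interval actually sounds.

```python
from fractions import Fraction


def simplest_ratio(f1_hz, f2_hz, max_denominator=16):
    """Approximate f2/f1 by the closest fraction whose denominator is at most
    max_denominator (an arbitrary cutoff chosen for illustration)."""
    return Fraction(f2_hz / f1_hz).limit_denominator(max_denominator)


print(simplest_ratio(440, 660))  # 3/2, a perfect fifth
print(simplest_ratio(440, 550))  # 5/4, a just major third
```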

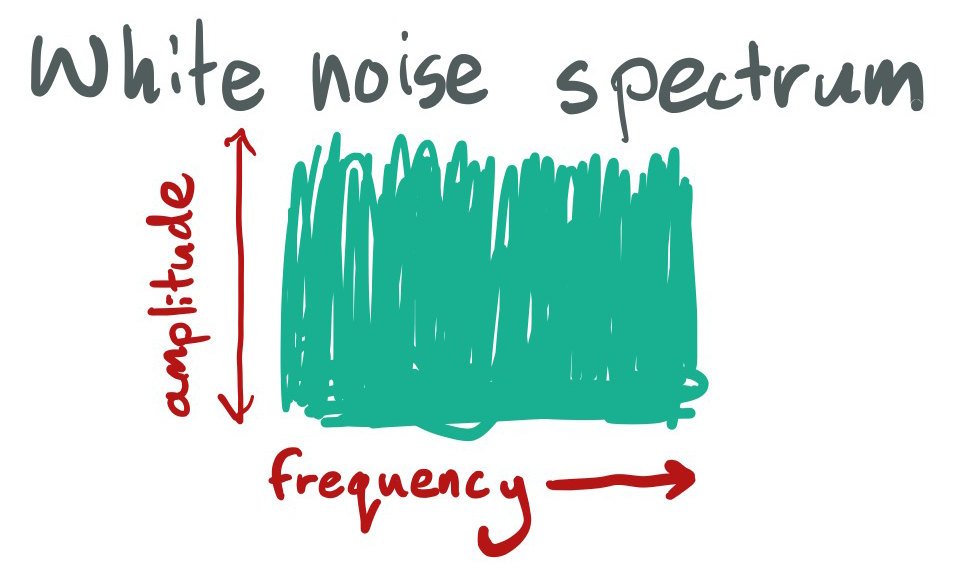

When a stream doesn't repeat, we perceive noise. In an FFT, noise shows up as energy spread across all frequencies. Poor-quality noise generators introduce some amount of repetition, and our brains perceive even the slightest hint of repetition as having a tonal quality, which affects the color of the noise's texture.
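A Python sketch of a "subpar" noise generator next to a better one. The poor generator is a deliberately tiny linear congruential generator (LCG) with modulus 256 and hypothetical constants a=5, c=1, which give it the longest possible period of 256 samples. At an assumed 44.1 kHz sample rate, that loop repeats about 172 times a second, well within the range we hear as pitch.

```python
import random


def white_noise(n, seed=None):
    """Independent uniform samples in [-1, 1] from Python's standard generator."""
    rng = random.Random(seed)
    return [rng.uniform(-1.0, 1.0) for _ in range(n)]


def tiny_lcg_noise(n, seed=0):
    """A deliberately poor generator: an LCG with modulus 256.
    Its output repeats every 256 samples (44100 / 256 is roughly 172 Hz)."""
    a, c, m = 5, 1, 256  # hypothetical constants that give the full period of 256
    x = seed
    out = []
    for _ in range(n):
        x = (a * x + c) % m
        out.append(x / 128.0 - 1.0)  # map 0..255 to [-1, 1)
    return out


good = white_noise(1024, seed=1)
bad = tiny_lcg_noise(1024)
print(bad[:256] == bad[256:512])  # True: the "noise" is really a 256-sample loop
```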

To summarize, sound is all about repetition: how things repeat, at what time scale, and the ratios between the frequencies of those repetitions. Even the simplest music reveals a depth of detail in these repetitions. It's worth noting that none of these repeats, at the waveform, tempo, or section scale, needs to be exact. In fact, exact repetition has a lifeless and boring quality, as it is rare in the physical world. Ironically, noise, which has no repetition at all, also sounds lifeless and boring. Overusing either causes ear fatigue.

We talked about repetition at the audio waveform scale and the tempo scale. You can easily recognize repetition at the section scale, the world of verses, choruses, and bridges. But none of this happens in a bubble: there is also repetition at the cultural scale. This is the world of expectations and symbols, where the sound of a movie gunshot has become divorced from the real world, and where the same mariachi band sounds macho in one country and goofy in another.

Working with sound and music is a beautiful balancing act between repetition and novelty across all these intersecting time scales, building tension through ambiguous inharmonic pitches, shifts in tempo, unexpected bridges and breaking expectations, and releasing it by returning to warm tones, a steady beat, a familiar chorus and a melody that sounds both familiar and new.

The history of music is the history of innovation, a reflection of the cutting edge of its time. Advances in technology, from wood carving to metalwork, have quickly been adopted for musical ends. Solo and small-group performances grew larger as communications improved and cities grew. Bach, a proud improviser who thought no one could match his skill, gave feedback that helped refine early pianos and used the growing printing industry to spread his compositions. Beethoven, amid a growing middle class, ran his performances like a business. Intricate instruments like the saxophone only exist because of advances in materials science.

The brilliant Ada Lovelace famously saw the impact computers could have on music:

> Supposing, for instance, that the fundamental relations of pitched sounds in the science of harmony and of musical composition were susceptible of such expression and adaptations, the engine might compose elaborate and scientific pieces of music of any degree of complexity or extent.
>
> Augusta Ada King, Countess of Lovelace

Broadly speaking, the greatest impact modern technology has had on sound is decoupling it from the physical. Sound production can now be completely independent of physical reality. While our perception is still grounded in physical experience, we can create sound objects with no parallel in reality, play instruments whose sound is independent of their physical form, adjust the tone and color of a sound without a workshop, and more.

Most of these are subjects for future blog posts, so we'll focus on a couple of things that matter for the Intonal tutorials.

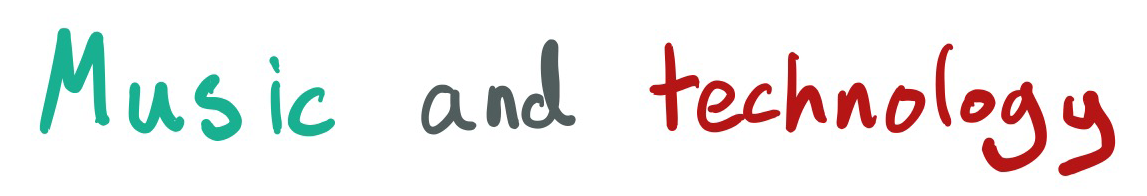

MIDI stands for Musical Instrument Digital Interface. It's simply a protocol for devices to talk to each other. It's defined in terms of a musical keyboard, i.e. the keys on a piano or organ. Each key is a discrete pitch, representing a note. To cover music theory in the lightest possible way: an octave is the interval between two notes where one note is twice the frequency of the other. There are 12 notes in each octave, and the norm these days is to space them evenly on a logarithmic frequency scale, because we hear differences in pitch as relative (ratios between frequencies) rather than absolute.
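To give a feel for what "a protocol for devices to talk to each other" looks like on the wire, here is a Python sketch that builds the raw bytes of MIDI Note On and Note Off messages. A Note On is three bytes: a status byte (0x90 plus the channel number 0-15), the note number (0-127), and the velocity (0-127); Note Off uses status 0x80. Actually sending these bytes to a device requires a MIDI port or library, which is not shown here, and the helper names are hypothetical.

```python
def note_on(channel, note, velocity):
    """Build a 3-byte MIDI Note On message: status 0x90 | channel, note number, velocity."""
    if not (0 <= channel <= 15 and 0 <= note <= 127 and 0 <= velocity <= 127):
        raise ValueError("channel must be 0-15; note and velocity must be 0-127")
    return bytes([0x90 | channel, note, velocity])


def note_off(channel, note, velocity=0):
    """Build a 3-byte MIDI Note Off message: status 0x80 | channel, note number, release velocity."""
    if not (0 <= channel <= 15 and 0 <= note <= 127 and 0 <= velocity <= 127):
        raise ValueError("channel must be 0-15; note and velocity must be 0-127")
    return bytes([0x80 | channel, note, velocity])


print(note_on(0, 60, 100).hex())  # 903c64: middle C (note 60), shown as channel 1 in most software
```

Note that the message carries a note number and a velocity, not a frequency or a sound; what the key actually sounds like is entirely up to the receiving instrument.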

Of these 12 notes, modern music theory picks 7 to form a scale. The 7 white keys in an octave form one such scale, the C major scale, and are named with the letters A-G. The black keys are named in relation to the white keys (sharp, or #, for above, such as C#, and flat, or ♭, for below, such as D♭). The modern tuning standard sets A4 (the A in the 4th octave) to 440 Hz, and MIDI numbers A4 as note 69.
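Putting the last two paragraphs together: with 12 equally spaced notes per octave, each semitone multiplies the frequency by 2^(1/12), and anchoring MIDI note 69 at 440 Hz gives a formula for every note. A Python sketch (the function names are illustrative):

```python
import math


def midi_to_hz(note, a4_hz=440.0):
    """12-tone equal temperament: each semitone multiplies frequency by 2 ** (1 / 12).
    MIDI note 69 is A4, tuned to a4_hz."""
    return a4_hz * 2 ** ((note - 69) / 12)


def hz_to_midi(freq_hz, a4_hz=440.0):
    """Inverse mapping; the result is fractional for pitches between notes."""
    return 69 + 12 * math.log2(freq_hz / a4_hz)


print(midi_to_hz(69))            # 440.0
print(midi_to_hz(81))            # 880.0, one octave (12 semitones) up
print(round(midi_to_hz(60), 2))  # 261.63, middle C
```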

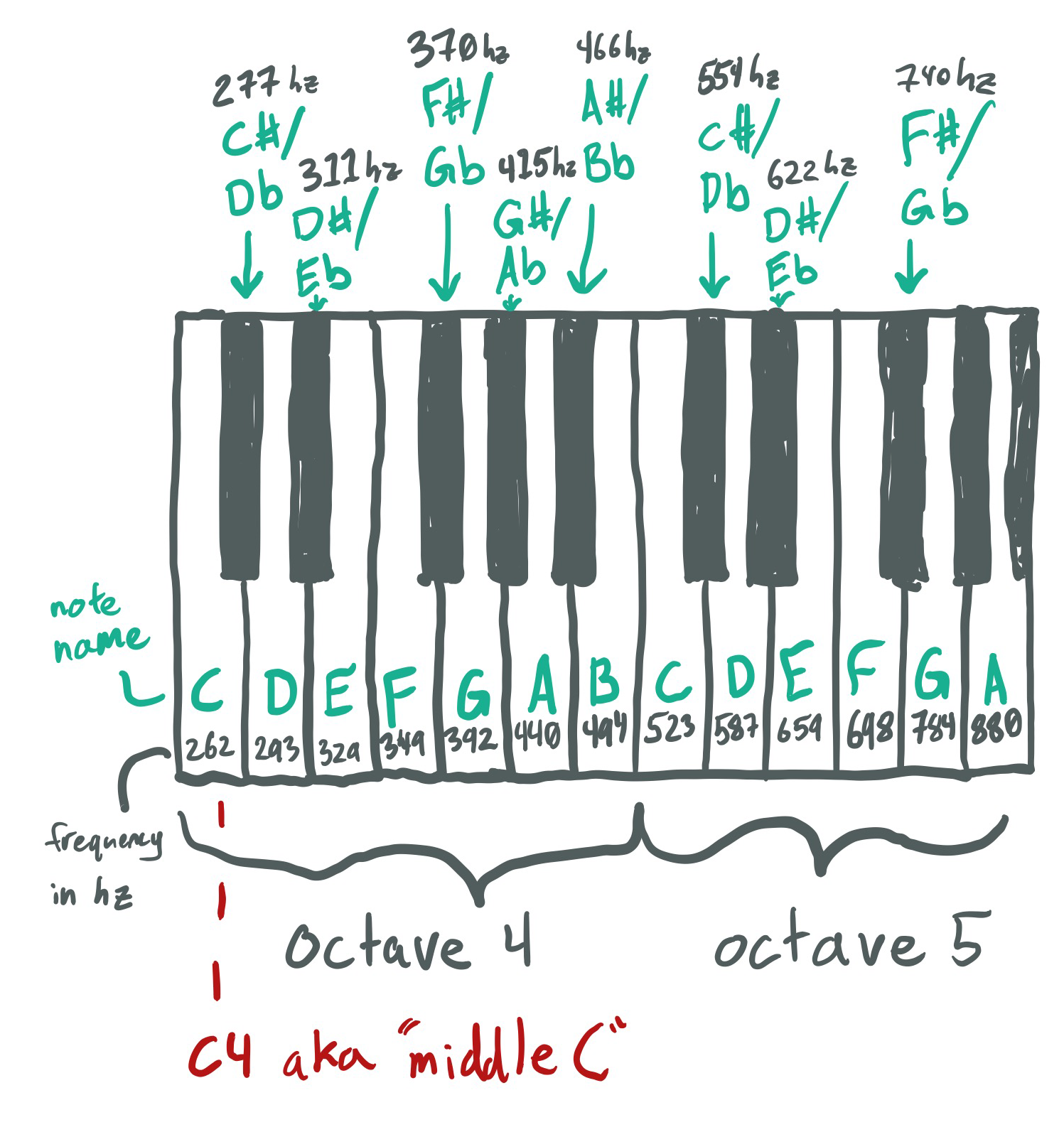

Digital filters are designed to cut (attenuate) specific frequencies. They can also be set to resonate at different frequencies. They're easiest to visualize in the frequency domain, by looking at how they shape noise.

Here is a lowpass filter, so called because it lets lower frequencies pass through:

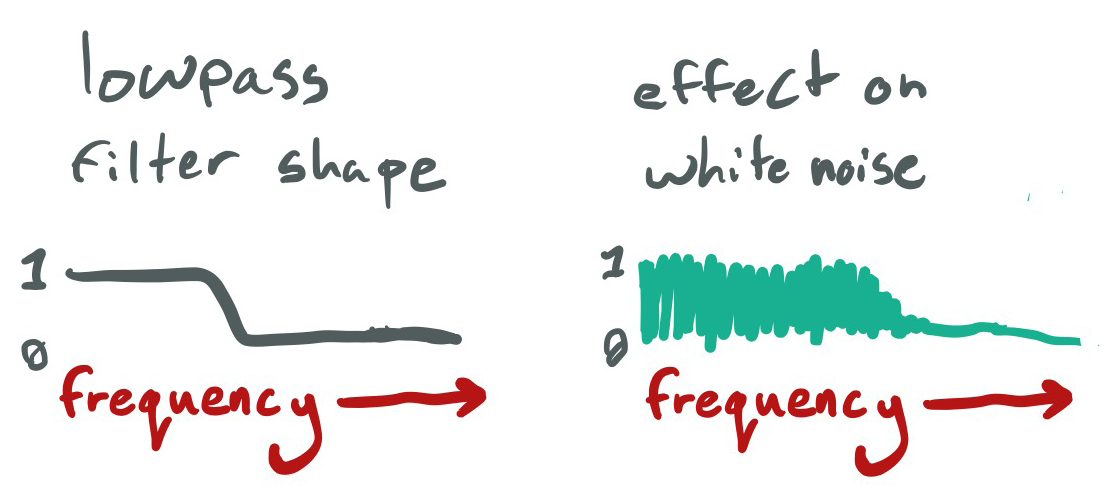
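A minimal Python sketch of the idea, using one of the simplest digital lowpass designs, a one-pole filter (Intonal's own filters may work differently). Each output sample moves a fixed fraction of the way toward the input, so slow changes get through while fast wiggles are smoothed away. The 44.1 kHz sample rate and the 500 Hz cutoff are assumed values.

```python
import math

SAMPLE_RATE = 44100  # assumed sample rate


def one_pole_lowpass(samples, cutoff_hz, sample_rate=SAMPLE_RATE):
    """One-pole lowpass: each output moves a fraction `a` of the way toward the input."""
    a = 1.0 - math.exp(-2.0 * math.pi * cutoff_hz / sample_rate)  # smoothing factor in (0, 1)
    y = 0.0
    out = []
    for x in samples:
        y += a * (x - y)
        out.append(y)
    return out


def sine(freq_hz, duration_s=0.1, sample_rate=SAMPLE_RATE):
    n = round(duration_s * sample_rate)
    return [math.sin(2 * math.pi * freq_hz * i / sample_rate) for i in range(n)]


def rms(samples):
    """Root-mean-square level: a simple measure of how much signal is left."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))


low = rms(one_pole_lowpass(sine(100), cutoff_hz=500))
high = rms(one_pole_lowpass(sine(10000), cutoff_hz=500))
print(low, high)  # the 100 Hz tone keeps most of its level; the 10 kHz tone is mostly removed
```

A one-pole filter rolls off gently; steeper or resonant filters use more poles, but the principle of averaging away fast changes is the same.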

A highpass filter does the opposite of a lowpass:

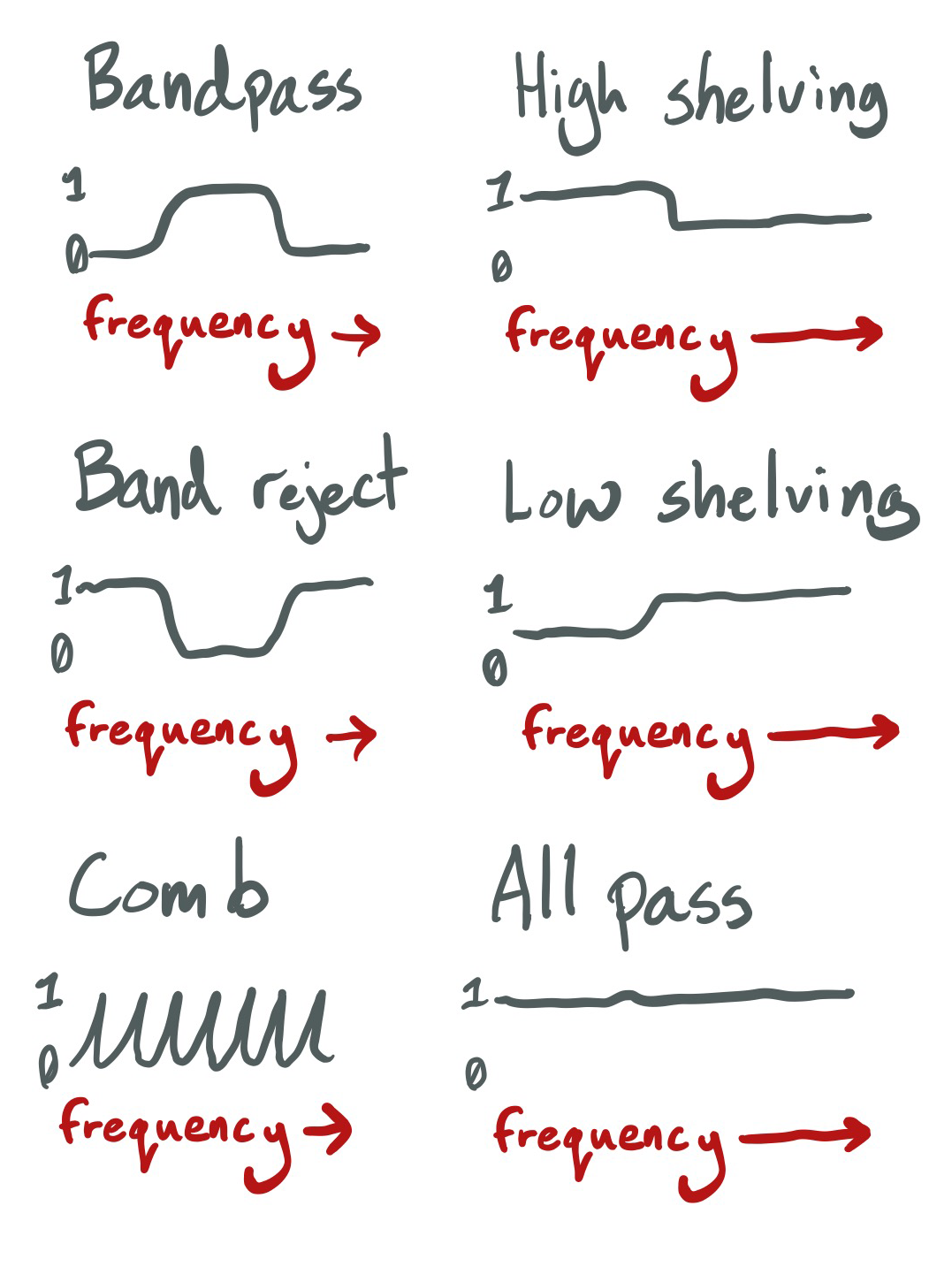
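A matching Python sketch of a simple first-order highpass, written in the discrete form of an analog RC highpass circuit (again an assumed, minimal design rather than a specific library's filter). It responds to the change between consecutive samples, so fast movement passes through while slow drifts, and any constant offset, decay away. The sample rate and 2 kHz cutoff are illustration values.

```python
import math

SAMPLE_RATE = 44100  # assumed sample rate


def one_pole_highpass(samples, cutoff_hz, sample_rate=SAMPLE_RATE):
    """First-order highpass (discrete RC form): y[n] = b * (y[n-1] + x[n] - x[n-1])."""
    rc = 1.0 / (2.0 * math.pi * cutoff_hz)  # time constant of the equivalent RC circuit
    dt = 1.0 / sample_rate
    b = rc / (rc + dt)
    y = 0.0
    prev_x = 0.0
    out = []
    for x in samples:
        y = b * (y + x - prev_x)
        prev_x = x
        out.append(y)
    return out


def sine(freq_hz, duration_s=0.1, sample_rate=SAMPLE_RATE):
    n = round(duration_s * sample_rate)
    return [math.sin(2 * math.pi * freq_hz * i / sample_rate) for i in range(n)]


def rms(samples):
    """Root-mean-square level: a simple measure of how much signal is left."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))


low = rms(one_pole_highpass(sine(100), cutoff_hz=2000))
high = rms(one_pole_highpass(sine(10000), cutoff_hz=2000))
print(low, high)  # now the 10 kHz tone survives and the 100 Hz tone is mostly removed
```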

Here are some other common filters:

If you're interested in digging deeper into any of these topics, join our Discord channel and we'll be happy to chat more or point you to more resources! If you came here to learn Intonal, you are now more than prepared to jump into Intro to Intonal, Part 1 (it's much shorter, I promise).